How DeepSeek Rewrote the Transformer [MLA]

Welch Labs

@welchlabsvideo

New Book! The Welch Labs Illustrated Guide to AI is now available for pre-order: https://www.welchlabs.com/resources/ai-book

Video Description

Thanks to KiwiCo for sponsoring today’s video! Go to https://www.kiwico.com/welchlabs and use code WELCHLABS for 50% off your first monthly club crate or for 20% off your first Panda Crate!

MLA/DeepSeek Poster at 17:12 (Free shipping for a limited time with code DEEPSEEK): https://www.welchlabs.com/resources/mladeepseek-attention-poster-13x19

Limited edition MLA Poster and Signed Book: https://www.welchlabs.com/resources/deepseek-bundle-mla-poster-and-signed-book-limited-run

Imaginary Numbers book is back in stock! https://www.welchlabs.com/resources/imaginary-numbers-book

Special Thanks to Patrons https://www.patreon.com/c/welchlabs

Juan Benet, Ross Hanson, Yan Babitski, AJ Englehardt, Alvin Khaled, Eduardo Barraza, Hitoshi Yamauchi, Jaewon Jung, Mrgoodlight, Shinichi Hayashi, Sid Sarasvati, Dominic Beaumont, Shannon Prater, Ubiquity Ventures, Matias Forti, Brian Henry, Tim Palade, Petar Vecutin, Nicolas baumann, Jason Singh, Robert Riley, vornska, Barry Silverman, Jake Ehrlich

References

DeepSeek-V2 paper: https://arxiv.org/pdf/2405.04434

DeepSeek-R1 paper: https://arxiv.org/abs/2501.12948

Great Article by Ege Erdil: https://epoch.ai/gradient-updates/how-has-deepseek-improved-the-transformer-architecture

GPT-2 Visualization: https://github.com/TransformerLensOrg/TransformerLens

Manim Animations: https://github.com/stephencwelch/manim_videos

Technical Notes

1. The DeepSeek-V2 paper claims a KV cache size reduction of 93.3%. They don’t exactly publish their methodology, but as far as I can tell it’s something like this: start with the DeepSeek-V2 hyperparameters here: https://huggingface.co/deepseek-ai/DeepSeek-V2/blob/main/configuration_deepseek.py. num_hidden_layers=30, num_attention_heads=32, v_head_dim=128. If DeepSeek-V2 were implemented with traditional MHA, the KV cache size would be 2*32*128*30*2 = 491,520 B/token. With MLA and a per-layer KV cache size of 576, we get a total cache size of 576*30*2 = 34,560 B/token. The percent reduction in KV cache size is then (491,520-34,560)/491,520 = 93.0%. The numbers I present in this video follow the same approach but are for the DeepSeek-V3/R1 architecture: https://huggingface.co/deepseek-ai/DeepSeek-V3/blob/main/config.json. num_hidden_layers=61, num_attention_heads=128, v_head_dim=128. So the traditional MHA cache would be 2*128*128*61*2 = 3,997,696 B/token. MLA reduces this to 576*61*2 = 70,272 B/token. For the DeepSeek-V3/R1 architecture, MLA reduces the KV cache size by a factor of 3,997,696/70,272 = 56.9x.

2. I claim a couple of times that MLA allows DeepSeek to generate tokens more than 6x faster than a vanilla transformer. The DeepSeek-V2 paper claims a slightly less than 6x throughput improvement with MLA, but since the V3/R1 architecture is heavier, we expect a larger lift, which is why I claim “more than 6x faster than a vanilla transformer”. In reality it’s probably significantly more than 6x for the V3/R1 architecture.

3. In all attention patterns and walkthroughs, we’re ignoring the |beginning of sentence| token. “The American flag is red, white, and” actually maps to 10 tokens if we include this starting token, and many attention patterns do assign high values to this token.

4. We’re ignoring bias terms in the matrix equations.

5. We’re ignoring positional embeddings. These are fascinating. See the DeepSeek papers and RoPE.
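The arithmetic in Technical Note 1 can be reproduced with a short Python sketch. The function names are hypothetical, and the sketch assumes 2 bytes per cached value (16-bit storage, which is what the trailing `*2` in the note implies). The 576 figure is taken here to be the 512-dimensional compressed KV latent plus 64 RoPE key dimensions (`kv_lora_rank` + `qk_rope_head_dim` in the linked configs); that breakdown is my reading of the configs, not something stated in the note. Computed with the MHA size as the denominator, the V2 reduction comes out at about 93.0%.

```python
def mha_kv_bytes_per_token(num_layers, num_heads, head_dim, bytes_per_value=2):
    """KV cache per token for standard multi-head attention.

    The leading 2 counts keys and values; bytes_per_value=2 is an
    assumption (16-bit storage).
    """
    return 2 * num_heads * head_dim * num_layers * bytes_per_value


def mla_kv_bytes_per_token(num_layers, latent_dim=576, bytes_per_value=2):
    """KV cache per token for MLA: one shared latent vector per layer.

    latent_dim=576 is assumed to be 512 (compressed KV latent) + 64 (RoPE key).
    """
    return latent_dim * num_layers * bytes_per_value


# Hyperparameters quoted in Technical Note 1 for DeepSeek-V2
v2_mha = mha_kv_bytes_per_token(num_layers=30, num_heads=32, head_dim=128)
v2_mla = mla_kv_bytes_per_token(num_layers=30)
v2_reduction_pct = 100 * (v2_mha - v2_mla) / v2_mha

# Hyperparameters quoted for DeepSeek-V3/R1
v3_mha = mha_kv_bytes_per_token(num_layers=61, num_heads=128, head_dim=128)
v3_mla = mla_kv_bytes_per_token(num_layers=61)
v3_factor = v3_mha / v3_mla

print(f"V2: {v2_mha:,} -> {v2_mla:,} B/token ({v2_reduction_pct:.1f}% smaller)")
print(f"V3/R1: {v3_mha:,} -> {v3_mla:,} B/token ({v3_factor:.1f}x smaller)")
```

Note that the layer count and the bytes-per-value factor cancel in both ratios, so under these assumptions the reduction depends only on the number of heads, the head dimension, and the latent size.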

You May Also Like

Transform Your AI Learning Today

AI-recommended products based on this video

LEGO City Mobile Police Dog Training 60369, SUV Toy Car with Trailer, Obstacle Course and Puppy Figures, Animal Playset for Boys and Girls Ages 5 Plus

soundcore by Anker P20i True Wireless Earbuds, 10mm Drivers with Big Bass, Bluetooth 5.3, 30H Long Playtime, IPX5 Water-Resistant, 2 Mics for AI Clear Calls, 22 Preset EQs, Customization via App

The Pandy - Emotional Healing on Demand, Realistic Panda Plush for Emotional Support, Mimics Natural Movements for Realistic Comfort, Pandy Ai Plush with Ultra-Soft Fur, The Comfort Hug You Have

Seasonic Focus V4 GX-1000 (ATX3) - 1000W - 80+ Gold - ATX 3.0 & PCIe 5.1 Ready -Full-Modular -ATX Form Factor -Premium Japanese Capacitor -10 Year Warranty -Nvidia RTX 30/40 Super & AMD GPU Compatible

Skytech Legacy Gaming PC Desktop, Ryzen 7 9800X3D 4.7 GHz (5.2 GHz Turbo Boost), NVIDIA RTX 5080 16GB GDDR7, 1TB Gen4 SSD, 32GB DDR5 RAM 6000 RGB, 1000W Gold ATX 3.0 PSU, 360 ARGB AIO, Wi-Fi, Win 11

Asus Dual NVIDIA GeForce RTX 3050 6GB OC Edition Gaming Graphics Card - PCIe 4.0, 6GB GDDR6 Memory, HDMI 2.1, DisplayPort 1.4a, 2-Slot Design, Axial-tech Fan Design, 0dB Technology, Steel Bracket

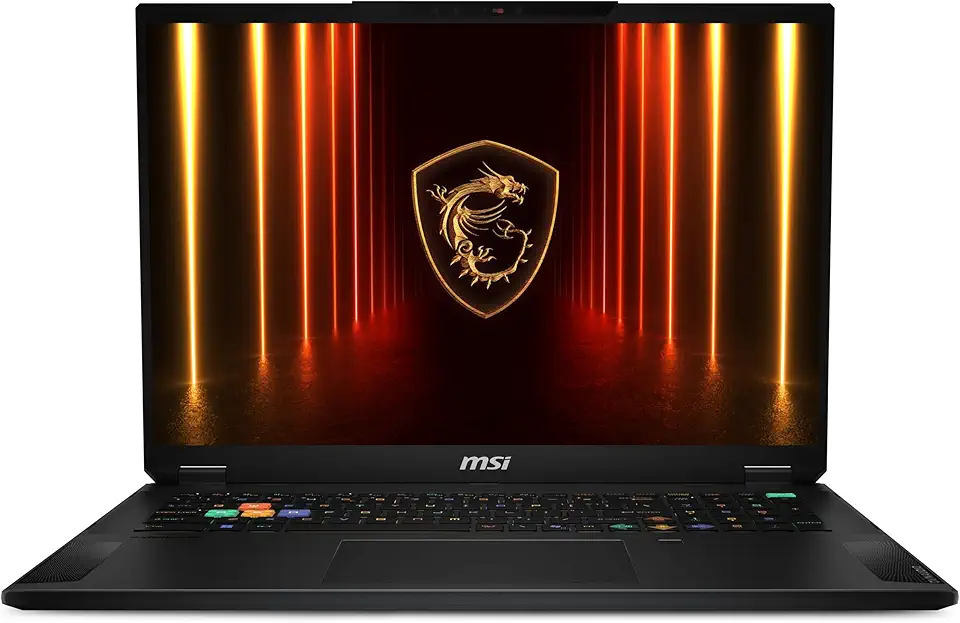

MSI Stealth 18 HX AI 18” 240Hz QHD+ Gaming Laptop: Intel Ultra 9-275HX, NVIDIA Geforce RTX 5080, 32GB DDR5, 2TB NVMe SSD, Wi-Fi 7, Win 11 Home :Midnight Black A2XWIG-045US

Dell 24 Monitor - SE2425HM - 23.8-inch Full HD (1920x1080) 16:9 100Hz Display, IPS Panel, 16.70 Million Colors, Anti-Glare, 1 HDMI / 1 VGA Port, TÜV Rheinland 3-Star*, Comfortview Plus - Black

Dell S2425HS Monitor - 23.8 Inch, FHD (1920x1080) Display, 100Hz Refresh Rate 1500:1 Contrast Ratio, TÜV Rheinland Eye Comfort 4 Star, Integrated 2x5W Speaker, Height/Tilt/Swivel/Pivot - Ash White

USB C Docking Station Dual Monitor for Dell Hp,15-in-1 Laptop Docking Station 3 Monitors USB C Hub with Dual 4K HDMI,8K DP,Button,PD Charging,Ethernet,6 USB A&C,SD/TF, Audio USB-C Multiport Adapter

Dell 24 Monitor - P2425H EPEAT

Logitech M185 Wireless Mouse, 2.4GHz with USB Mini Receiver, 12-Month Battery Life, 1000 DPI Optical Tracking, Ambidextrous, Compatible with PC, Mac, Laptop - Black

Logitech G203 Wired Gaming Mouse, 8,000 DPI, Rainbow Optical Effect LIGHTSYNC RGB, 6 Programmable Buttons, On-Board Memory, Screen Mapping, PC/Mac Computer and Laptop Compatible - Black

Logitech G305 Lightspeed Wireless Gaming Mouse, Hero 12K Sensor, 12,000 DPI, Lightweight, 6 Programmable Buttons, 250h Battery Life, On-Board Memory, PC/Mac - Black

Logitech G502 Hero High Performance Wired Gaming Mouse, Hero 25K Sensor, 25,600 DPI, RGB, Adjustable Weights, 11 Programmable Buttons, On-Board Memory, PC/Mac, Black

![The Misconception that Almost Stopped AI [How Models Learn Part 1]](https://imgz.pc97.com/?width=500&fit=cover&image=https://i.ytimg.com/vi/NrO20Jb-hy0/hqdefault.jpg)

![The Dark Matter of AI [Mechanistic Interpretability]](https://imgz.pc97.com/?width=500&fit=cover&image=https://i.ytimg.com/vi/UGO_Ehywuxc/hqdefault.jpg)

![The most beautiful equation in math, explained visually [Euler’s Formula]](https://imgz.pc97.com/?width=500&fit=cover&image=https://i.ytimg.com/vi/f8CXG7dS-D0/hqdefault.jpg)

![The moment we stopped understanding AI [AlexNet]](https://imgz.pc97.com/?width=500&fit=cover&image=https://i.ytimg.com/vi/UZDiGooFs54/hqdefault.jpg)